Today, give the Techtonique web app a try: it is a tool designed to help you make informed, data-driven decisions using Mathematics, Statistics, Machine Learning, and Data Visualization. Here is a tutorial with audio, video, code, and slides: https://moudiki2.gumroad.com/l/nrhgb. 100 API requests per month are now (and forever) offered to every user, regardless of the pricing tier.

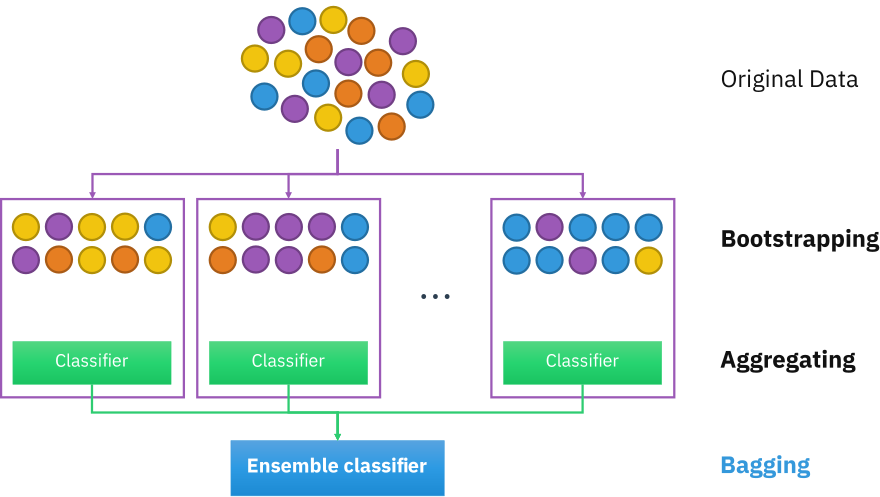

I recently released the genbooster Python package (also usable from R), which provides Gradient-Boosting and Bootstrap aggregating (bagging) implementations with a Rust backend. Every base learner in the ensembles is trained on randomized features, as a form of feature engineering. The package was downloaded 3000 times in 5 days, so I guess it's somewhat useful.
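To make "randomized features as a form of feature engineering" concrete, here is a minimal sketch of gradient boosting with a squared-error loss, in which each base learner is fit on the original features plus a few random nonlinear features, tanh(XW + b). The class name `RandomFeatureBooster`, the Gaussian projection, the tanh activation, and the default hyperparameters are all assumptions made for illustration: this is not genbooster's code, which runs in Rust and may build its features differently.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_diabetes
from sklearn.model_selection import train_test_split
from sklearn.tree import ExtraTreeRegressor


class RandomFeatureBooster:
    """Hypothetical sketch of L2 gradient boosting where every base learner is
    fit on [X, tanh(X W + b)], with a fresh random (W, b) at each iteration.

    The tanh random-feature map is an illustrative assumption, not a
    description of genbooster's internals.
    """

    def __init__(self, base_estimator, n_estimators=100, learning_rate=0.1,
                 n_hidden_features=5, seed=123):
        self.base_estimator = base_estimator
        self.n_estimators = n_estimators
        self.learning_rate = learning_rate
        self.n_hidden_features = n_hidden_features
        self.seed = seed

    @staticmethod
    def _augment(Xs, W, b):
        # Original (standardized) features plus randomized nonlinear features
        return np.hstack([Xs, np.tanh(Xs @ W + b)])

    def fit(self, X, y):
        X = np.asarray(X, dtype=np.float64)
        y = np.asarray(y, dtype=np.float64)
        rng = np.random.default_rng(self.seed)
        self.mean_, self.scale_ = X.mean(axis=0), X.std(axis=0) + 1e-12
        Xs = (X - self.mean_) / self.scale_
        self.init_ = y.mean()          # stage 0: constant prediction
        residuals = y - self.init_     # negative gradient of the squared loss
        self.stages_ = []
        for _ in range(self.n_estimators):
            W = rng.standard_normal((X.shape[1], self.n_hidden_features))
            b = rng.standard_normal(self.n_hidden_features)
            Z = self._augment(Xs, W, b)
            learner = clone(self.base_estimator).fit(Z, residuals)
            # Shrink the new learner's contribution and update the residuals
            residuals = residuals - self.learning_rate * learner.predict(Z)
            self.stages_.append((W, b, learner))
        return self

    def predict(self, X):
        Xs = (np.asarray(X, dtype=np.float64) - self.mean_) / self.scale_
        pred = np.full(Xs.shape[0], self.init_)
        for W, b, learner in self.stages_:
            pred += self.learning_rate * learner.predict(self._augment(Xs, W, b))
        return pred


if __name__ == "__main__":
    X, y = load_diabetes(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
    model = RandomFeatureBooster(ExtraTreeRegressor(max_depth=3, random_state=0))
    model.fit(X_train, y_train)
    rmse = np.sqrt(np.mean((model.predict(X_test) - y_test) ** 2))
    print(f"Test RMSE: {rmse:.2f}")
```

Any scikit-learn regressor can be plugged in as `base_estimator`, mirroring how `BoosterClassifier(base_estimator=...)` is called in the benchmarks. Drawing a fresh projection at every iteration adds diversity between successive learners, in a similar spirit to column subsampling in tree boosting.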

Previously, my generic Gradient Boosting algorithm was implemented in the Python package mlsauce (see #172, #169, #166, #165), but that package can be difficult to install on some systems. On Windows, for example, you may need the Windows Subsystem for Linux (WSL). Installing it from PyPI is also nearly impossible, so it has to be installed directly from GitHub.

This post is a quick overview of genbooster, in Python and R. It was also an occasion to “learn”/try the Rust programming language, and I'm happy with the result: a stable package that's easy to install. However, I wasn't blown away by the speed (hey, Rust guys ;)), which is roughly equivalent to that of Cython (that is, compiled C under the hood).
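The speed observations in this post come from wall-clock timings around `fit` and `predict`, taken with `time.time()`. For short runs, a slightly more careful harness repeats each measurement and uses `time.perf_counter()`. Here is one way to do that; the helper name `time_fit_predict` and the median-of-5 default are assumptions, not part of genbooster.

```python
import statistics
import time

from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeRegressor


def time_fit_predict(make_model, X_train, y_train, X_test, n_repeats=5):
    """Hypothetical helper: median (fit, predict) wall-clock seconds over
    n_repeats freshly constructed models."""
    fit_times, predict_times = [], []
    for _ in range(n_repeats):
        model = make_model()  # fresh, unfitted model for each repeat
        # perf_counter is monotonic with the highest available resolution;
        # time.time() can jump if the system clock is adjusted
        start = time.perf_counter()
        model.fit(X_train, y_train)
        fit_times.append(time.perf_counter() - start)
        start = time.perf_counter()
        model.predict(X_test)
        predict_times.append(time.perf_counter() - start)
    # The median is less sensitive than a single run to one-off slowdowns
    return statistics.median(fit_times), statistics.median(predict_times)


if __name__ == "__main__":
    X, y = load_iris(return_X_y=True)
    fit_s, predict_s = time_fit_predict(DecisionTreeRegressor, X[:120], y[:120], X[120:])
    print(f"fit: {fit_s:.4f} s, predict: {predict_s:.4f} s")
```

To time genbooster itself, pass a zero-argument factory such as `lambda: BoosterClassifier(base_estimator=ExtraTreeRegressor())`, so that each repeat starts from an unfitted model.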

Python version

0 - Install Rust utilities (if necessary)

!curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -s -- -y

import os

os.environ['PATH'] = f"/root/.cargo/bin:{os.environ['PATH']}"

!echo $PATH

!rustc --version

!cargo --version

1 - 1 Import packages

!pip install genbooster

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

from sklearn.utils.discovery import all_estimators

from sklearn.datasets import load_iris, load_breast_cancer, load_wine, load_digits

from sklearn.linear_model import Ridge, RidgeCV

from sklearn.tree import ExtraTreeRegressor

from sklearn.model_selection import train_test_split

from genbooster.genboosterclassifier import BoosterClassifier

from genbooster.randombagclassifier import RandomBagClassifier

from sklearn.metrics import mean_squared_error

from tqdm import tqdm

from time import time

1 - 2 Boosting classifier

datasets = [load_iris(return_X_y=True),
            load_breast_cancer(return_X_y=True),
            load_wine(return_X_y=True),
            load_digits(return_X_y=True)]

datasets_names = ['iris', 'breast_cancer', 'wine', 'digits']

# Booster
for dataset, dataset_name in tqdm(zip(datasets, datasets_names)):
    print("\n data set ", dataset_name)
    accuracy_scores = []
    X, y = dataset
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
    for estimator in tqdm(all_estimators(type_filter='regressor')):
        if (estimator[0] in ["BaggingRegressor", "ExtraTreeRegressor", "DecisionTreeRegressor", "ElasticNetCV", "SVR"]):
            print("Estimator: ", estimator[0])
            try:
                clf = BoosterClassifier(base_estimator=estimator[1]())
                start = time()
                clf.fit(X_train, y_train)
                training_time = time() - start
                print(f'\n Time (training): {training_time}')
                start = time()
                preds = clf.predict(X_test)
                inference_time = time() - start
                print(f'\n Time (inference): {inference_time}')
                print("Accuracy", np.mean(preds == y_test))
                accuracy_scores.append((dataset_name, estimator[0], np.mean(preds == y_test),
                                        training_time, inference_time))
            except Exception as e:
                continue
    accuracy_scores_df = pd.DataFrame(accuracy_scores, columns=['dataset', 'estimator', 'accuracy',
                                                                'training_time', 'inference_time'])
    accuracy_scores_df.sort_values(by='accuracy', ascending=False, inplace=True)
    display(accuracy_scores_df)

data set iris

Estimator: BaggingRegressor Time (training): 7.691629648208618 Time (inference): 0.5218164920806885 Accuracy 1.0

Estimator: DecisionTreeRegressor Time (training): 0.23518085479736328 Time (inference): 0.05045819282531738 Accuracy 1.0

Estimator: ElasticNetCV Time (training): 7.839452505111694 Time (inference): 0.0176393985748291 Accuracy 0.9333333333333333

Estimator: ExtraTreeRegressor Time (training): 0.28067755699157715 Time (inference): 0.04417252540588379 Accuracy 1.0

Estimator: SVR Time (training): 0.8663649559020996 Time (inference): 0.1500096321105957 Accuracy 1.0

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 0 | iris | BaggingRegressor | 1.00 | 7.69 | 0.52 |
| 1 | iris | DecisionTreeRegressor | 1.00 | 0.24 | 0.05 |
| 3 | iris | ExtraTreeRegressor | 1.00 | 0.28 | 0.04 |
| 4 | iris | SVR | 1.00 | 0.87 | 0.15 |
| 2 | iris | ElasticNetCV | 0.93 | 7.84 | 0.02 |

data set breast_cancer

Estimator: BaggingRegressor Time (training): 49.687711000442505 Time (inference): 0.46684932708740234 Accuracy 0.9473684210526315

Estimator: DecisionTreeRegressor Time (training): 1.357734203338623 Time (inference): 0.028873205184936523 Accuracy 0.9473684210526315

Estimator: ElasticNetCV Time (training): 23.06330966949463 Time (inference): 0.016884803771972656 Accuracy 0.9824561403508771

Estimator: ExtraTreeRegressor Time (training): 0.28661274909973145 Time (inference): 0.03186511993408203 Accuracy 0.9736842105263158

Estimator: SVR Time (training): 2.7746951580047607 Time (inference): 0.359896183013916 Accuracy 0.9824561403508771

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 2 | breast_cancer | ElasticNetCV | 0.98 | 23.06 | 0.02 |
| 4 | breast_cancer | SVR | 0.98 | 2.77 | 0.36 |
| 3 | breast_cancer | ExtraTreeRegressor | 0.97 | 0.29 | 0.03 |
| 0 | breast_cancer | BaggingRegressor | 0.95 | 49.69 | 0.47 |
| 1 | breast_cancer | DecisionTreeRegressor | 0.95 | 1.36 | 0.03 |

data set wine

Estimator: BaggingRegressor Time (training): 10.581303596496582 Time (inference): 0.49717187881469727 Accuracy 1.0

Estimator: DecisionTreeRegressor Time (training): 0.3143930435180664 Time (inference): 0.032999515533447266 Accuracy 0.9722222222222222

Estimator: ElasticNetCV Time (training): 10.467571020126343 Time (inference): 0.02497076988220215 Accuracy 1.0

Estimator: ExtraTreeRegressor Time (training): 0.36039304733276367 Time (inference): 0.06993508338928223 Accuracy 0.9722222222222222

Estimator: SVR Time (training): 1.1567068099975586 Time (inference): 0.15232062339782715 Accuracy 0.7777777777777778

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 0 | wine | BaggingRegressor | 1.00 | 10.58 | 0.50 |
| 2 | wine | ElasticNetCV | 1.00 | 10.47 | 0.02 |
| 1 | wine | DecisionTreeRegressor | 0.97 | 0.31 | 0.03 |
| 3 | wine | ExtraTreeRegressor | 0.97 | 0.36 | 0.07 |
| 4 | wine | SVR | 0.78 | 1.16 | 0.15 |

data set digits

Estimator: BaggingRegressor Time (training): 528.3990564346313 Time (inference): 3.7134416103363037 Accuracy 0.9666666666666667

Estimator: DecisionTreeRegressor Time (training): 12.664016008377075 Time (inference): 0.2317643165588379 Accuracy 0.9222222222222223

Estimator: ElasticNetCV Time (training): 217.41770839691162 Time (inference): 0.12707233428955078 Accuracy 0.9472222222222222

Estimator: ExtraTreeRegressor Time (training): 4.515539646148682 Time (inference): 0.37054967880249023 Accuracy 0.975

Estimator: SVR Time (training): 140.5957386493683 Time (inference): 17.804856538772583 Accuracy 0.9583333333333334

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 3 | digits | ExtraTreeRegressor | 0.97 | 4.52 | 0.37 |
| 0 | digits | BaggingRegressor | 0.97 | 528.40 | 3.71 |
| 4 | digits | SVR | 0.96 | 140.60 | 17.80 |
| 2 | digits | ElasticNetCV | 0.95 | 217.42 | 0.13 |
| 1 | digits | DecisionTreeRegressor | 0.92 | 12.66 | 0.23 |

1 - 3 Bagging classifier

# Random Bagging
for dataset, dataset_name in zip(datasets, datasets_names):
    print("\n data set ", dataset_name)
    accuracy_scores = []
    X, y = dataset
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
    # Convert X_train and X_test to NumPy arrays with float64 dtype (once per data set)
    X_train = X_train.astype(np.float64)
    X_test = X_test.astype(np.float64)
    for estimator in tqdm(all_estimators(type_filter='regressor')):
        if (estimator[0] in ["BaggingRegressor", "ExtraTreeRegressor", "DecisionTreeRegressor", "ElasticNetCV", "SVR"]):
            print("Estimator: ", estimator[0])
            try:
                clf = RandomBagClassifier(base_estimator=estimator[1]())
                start = time()
                clf.fit(X_train, y_train)
                training_time = time() - start
                print(f'\n Time (training): {training_time}')
                start = time()
                try:  # Nested try-except to catch a Rust panic during prediction
                    preds = clf.predict(X_test)
                # PyO3's PanicException derives from BaseException, not Exception,
                # and its type name is not part of str(exception)
                except BaseException as pred_e:
                    if type(pred_e).__name__ == "PanicException":
                        print(f"PanicException during prediction with estimator {estimator[0]}: {pred_e}")
                        continue  # Skip this estimator and move to the next
                    raise  # Re-raise other exceptions
                inference_time = time() - start
                print("Accuracy", np.mean(preds == y_test))
                print(f'\n Time (inference): {inference_time}')
                accuracy_scores.append((dataset_name, estimator[0], np.mean(preds == y_test),
                                        training_time, inference_time))
            except Exception as e:
                print(f"Error with estimator {estimator[0]}: {e}")
                continue
    accuracy_scores_df = pd.DataFrame(accuracy_scores, columns=['dataset', 'estimator', 'accuracy',
                                                                'training_time', 'inference_time'])
    accuracy_scores_df.sort_values(by='accuracy', ascending=False, inplace=True)
    display(accuracy_scores_df)

data set iris

Estimator: BaggingRegressor Time (training): 5.794196128845215 Accuracy 1.0 Time (inference): 1.0278966426849365

Estimator: DecisionTreeRegressor Time (training): 0.41347432136535645 Accuracy 1.0 Time (inference): 0.0855255126953125

Estimator: ElasticNetCV Time (training): 22.3568377494812 Accuracy 0.9 Time (inference): 0.04216742515563965

Estimator: ExtraTreeRegressor Time (training): 0.24758148193359375 Accuracy 1.0 Time (inference): 0.07839632034301758

Estimator: SVR Time (training): 0.4286081790924072 Accuracy 0.9666666666666667 Time (inference): 0.09523606300354004

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 0 | iris | BaggingRegressor | 1.00 | 5.79 | 1.03 |
| 1 | iris | DecisionTreeRegressor | 1.00 | 0.41 | 0.09 |
| 3 | iris | ExtraTreeRegressor | 1.00 | 0.25 | 0.08 |
| 4 | iris | SVR | 0.97 | 0.43 | 0.10 |
| 2 | iris | ElasticNetCV | 0.90 | 22.36 | 0.04 |

data set breast_cancer

Estimator: BaggingRegressor Time (training): 16.8834490776062 Accuracy 0.956140350877193 Time (inference): 0.8271636962890625

Estimator: DecisionTreeRegressor Time (training): 2.403634548187256 Accuracy 0.9473684210526315 Time (inference): 0.04659414291381836

Estimator: ElasticNetCV Time (training): 49.08372521400452 Accuracy 0.9473684210526315 Time (inference): 0.05168628692626953

Estimator: ExtraTreeRegressor Time (training): 0.3687095642089844 Accuracy 0.9649122807017544 Time (inference): 0.04958987236022949

Estimator: SVR Time (training): 1.4680569171905518 Accuracy 0.9473684210526315 Time (inference): 0.522120475769043

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 3 | breast_cancer | ExtraTreeRegressor | 0.96 | 0.37 | 0.05 |
| 0 | breast_cancer | BaggingRegressor | 0.96 | 16.88 | 0.83 |
| 1 | breast_cancer | DecisionTreeRegressor | 0.95 | 2.40 | 0.05 |
| 2 | breast_cancer | ElasticNetCV | 0.95 | 49.08 | 0.05 |
| 4 | breast_cancer | SVR | 0.95 | 1.47 | 0.52 |

data set wine

Estimator: BaggingRegressor Time (training): 8.266825675964355 Accuracy 0.9722222222222222 Time (inference): 0.85321044921875

Estimator: DecisionTreeRegressor Time (training): 0.46535372734069824 Accuracy 0.8888888888888888 Time (inference): 0.07617998123168945

Estimator: ElasticNetCV Time (training): 27.90892767906189 Accuracy 0.9722222222222222 Time (inference): 0.06512594223022461

Estimator: ExtraTreeRegressor Time (training): 0.27864670753479004 Accuracy 1.0 Time (inference): 0.0849001407623291

Estimator: SVR Time (training): 0.5329914093017578 Accuracy 0.7777777777777778 Time (inference): 0.12239527702331543

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 3 | wine | ExtraTreeRegressor | 1.00 | 0.28 | 0.08 |
| 0 | wine | BaggingRegressor | 0.97 | 8.27 | 0.85 |
| 2 | wine | ElasticNetCV | 0.97 | 27.91 | 0.07 |
| 1 | wine | DecisionTreeRegressor | 0.89 | 0.47 | 0.08 |
| 4 | wine | SVR | 0.78 | 0.53 | 0.12 |

data set digits

Estimator: BaggingRegressor Time (training): 138.1354341506958 Accuracy 0.95 Time (inference): 4.882359981536865

Estimator: DecisionTreeRegressor Time (training): 19.426659107208252 Accuracy 0.875 Time (inference): 0.39545631408691406

Estimator: ElasticNetCV Time (training): 601.1737794876099 Accuracy 0.9444444444444444 Time (inference): 0.49893951416015625

Estimator: ExtraTreeRegressor Time (training): 6.681625127792358 Accuracy 0.9333333333333333 Time (inference): 0.41587328910827637

Estimator: SVR Time (training): 62.861069440841675 Accuracy 0.9277777777777778 Time (inference): 17.88122320175171

|  | dataset | estimator | accuracy | training_time (s) | inference_time (s) |
|---|---|---|---|---|---|
| 0 | digits | BaggingRegressor | 0.95 | 138.14 | 4.88 |
| 2 | digits | ElasticNetCV | 0.94 | 601.17 | 0.50 |
| 3 | digits | ExtraTreeRegressor | 0.93 | 6.68 | 0.42 |
| 4 | digits | SVR | 0.93 | 62.86 | 17.88 |
| 1 | digits | DecisionTreeRegressor | 0.88 | 19.43 | 0.40 |
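Both `BoosterClassifier` and `RandomBagClassifier` above accept scikit-learn *regressors* as base learners. A common way to turn a regressor into a multi-class classifier is to regress on one-hot encoded labels and predict the class with the largest score. The sketch below shows that general technique with a single regressor; it is an assumption for illustration, not a description of how genbooster handles classes internally, and `OneHotRegressionClassifier` is a hypothetical name.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split


class OneHotRegressionClassifier:
    """Hypothetical sketch: multi-class classification with any regressor
    that accepts a two-dimensional (multi-output) target."""

    def __init__(self, regressor):
        self.regressor = regressor

    def fit(self, X, y):
        self.classes_, y_idx = np.unique(y, return_inverse=True)
        # One column per class: 1.0 for the observed class, 0.0 otherwise
        Y = np.eye(len(self.classes_))[y_idx]
        self.regressor_ = clone(self.regressor).fit(X, Y)
        return self

    def predict(self, X):
        scores = self.regressor_.predict(X)
        # The class whose regression score is largest wins
        return self.classes_[np.argmax(scores, axis=1)]


if __name__ == "__main__":
    X, y = load_iris(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
    clf = OneHotRegressionClassifier(Ridge(alpha=1.0)).fit(X_train, y_train)
    print("Accuracy", np.mean(clf.predict(X_test) == y_test))
```

Regressors that do not accept a two-dimensional target (for instance `SVR`) would need one model per class column, e.g. by wrapping them in `sklearn.multioutput.MultiOutputRegressor`.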

1 - 4 Boosting regression

import pandas as pd

from genbooster.genboosterregressor import BoosterRegressor

from genbooster.randombagregressor import RandomBagRegressor

url = "https://raw.githubusercontent.com/Techtonique/datasets/refs/heads/main/tabular/regression/boston_dataset2.csv"

df = pd.read_csv(url)

print(df.head())

X = df.drop(columns=['target', 'training_index'])

y = df['target']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=142)

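|  | V0 | V1 | V2 | V3 | V4 | V5 | V6 | V7 | V8 | V9 | V10 | V11 | V12 | target | training_index |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.01 | 18.00 | 2.31 | 0.00 | 0.54 | 6.58 | 65.20 | 4.09 | 1.00 | 296.00 | 15.30 | 396.90 | 4.98 | 24.00 | 0 |
| 1 | 0.03 | 0.00 | 7.07 | 0.00 | 0.47 | 6.42 | 78.90 | 4.97 | 2.00 | 242.00 | 17.80 | 396.90 | 9.14 | 21.60 | 1 |
| 2 | 0.03 | 0.00 | 7.07 | 0.00 | 0.47 | 7.18 | 61.10 | 4.97 | 2.00 | 242.00 | 17.80 | 392.83 | 4.03 | 34.70 | 0 |
| 3 | 0.03 | 0.00 | 2.18 | 0.00 | 0.46 | 7.00 | 45.80 | 6.06 | 3.00 | 222.00 | 18.70 | 394.63 | 2.94 | 33.40 | 1 |
| 4 | 0.07 | 0.00 | 2.18 | 0.00 | 0.46 | 7.15 | 54.20 | 6.06 | 3.00 | 222.00 | 18.70 | 396.90 | 5.33 | 36.20 | 1 |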

rmse_scores = []
for estimator in tqdm(all_estimators(type_filter='regressor')):
    if (estimator[0] in ["BaggingRegressor", "ExtraTreeRegressor", "DecisionTreeRegressor", "ElasticNetCV", "SVR"]):
        print("Estimator: ", estimator[0])
        clf = BoosterRegressor(base_estimator=estimator[1]())
        start = time()
        clf.fit(X_train, y_train)
        training_time = time() - start
        print(f'\n Time (training): {training_time}')
        start = time()
        preds = clf.predict(X_test)
        inference_time = time() - start
        print(f'\n Time (inference): {inference_time}')
        print("RMSE", np.sqrt(np.mean((preds - y_test)**2)))
        rmse_scores.append((estimator[0], np.sqrt(np.mean((preds - y_test)**2)),
                            training_time, inference_time))
rmse_scores_df = pd.DataFrame(rmse_scores, columns=['estimator', 'RMSE',
                                                    'training_time', 'inference_time'])
rmse_scores_df.sort_values(by='RMSE', ascending=True, inplace=True)
display(rmse_scores_df)

Estimator: BaggingRegressor Time (training): 8.92781949043274 Time (inference): 0.2669663429260254 RMSE 2.858643903624637

Estimator: DecisionTreeRegressor Time (training): 0.6041898727416992 Time (inference): 0.022806882858276367 RMSE 4.344879007810268

Estimator: ElasticNetCV Time (training): 2.9936535358428955 Time (inference): 0.008358955383300781 RMSE 4.224416765751234

Estimator: ExtraTreeRegressor Time (training): 0.26199960708618164 Time (inference): 0.02275371551513672 RMSE 2.5375423322614834

Estimator: SVR Time (training): 2.0531556606292725 Time (inference): 0.4060652256011963 RMSE 3.6491765040296373

|  | estimator | RMSE | training_time (s) | inference_time (s) |
|---|---|---|---|---|
| 3 | ExtraTreeRegressor | 2.54 | 0.26 | 0.02 |
| 0 | BaggingRegressor | 2.86 | 8.93 | 0.27 |
| 4 | SVR | 3.65 | 2.05 | 0.41 |
| 2 | ElasticNetCV | 4.22 | 2.99 | 0.01 |
| 1 | DecisionTreeRegressor | 4.34 | 0.60 | 0.02 |
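The Python loops compute the root mean squared error by hand, RMSE = sqrt(mean((y_pred - y_true)^2)), while the R section takes the square root of scikit-learn's `mean_squared_error`. A quick check that the two agree, on toy numbers: the targets are the first five Boston targets from `df.head()`, and the predictions are made up for illustration.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Toy values: real targets, made-up predictions
y_true = np.array([24.0, 21.6, 34.7, 33.4, 36.2])
y_pred = np.array([25.1, 20.9, 31.0, 34.0, 35.5])

# RMSE computed by hand, as in the Python loops
rmse_manual = np.sqrt(np.mean((y_pred - y_true) ** 2))
# Same quantity via scikit-learn, as in the R section
rmse_sklearn = np.sqrt(mean_squared_error(y_true, y_pred))
print(rmse_manual, rmse_sklearn)
```

On scikit-learn 1.4 or later, `sklearn.metrics.root_mean_squared_error` returns the same value directly.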

1 - 5 Bagging regression

rmse_scores = []
for estimator in tqdm(all_estimators(type_filter='regressor')):
    if (estimator[0] in ["BaggingRegressor", "ExtraTreeRegressor", "DecisionTreeRegressor", "ElasticNetCV", "SVR"]):
        print("Estimator: ", estimator[0])
        try:
            clf = RandomBagRegressor(base_estimator=estimator[1]())
            start = time()
            clf.fit(X_train, y_train)
            training_time = time() - start
            print(f'\n Time (training): {training_time}')
            start = time()
            preds = clf.predict(X_test)
            inference_time = time() - start
            print(f'\n Time (inference): {inference_time}')
            print("RMSE", np.sqrt(np.mean((preds - y_test)**2)))
            rmse_scores.append((estimator[0], np.sqrt(np.mean((preds - y_test)**2)),
                                training_time, inference_time))
        except Exception as e:
            continue
rmse_scores_df = pd.DataFrame(rmse_scores, columns=['estimator', 'RMSE',
                                                    'training_time', 'inference_time'])
rmse_scores_df.sort_values(by='RMSE', ascending=True, inplace=True)
display(rmse_scores_df)

Estimator: BaggingRegressor Time (training): 9.518575429916382 Time (inference): 0.2690126895904541 RMSE 2.771679576927226

Estimator: DecisionTreeRegressor Time (training): 0.6097230911254883 Time (inference): 0.021549463272094727 RMSE 4.398489123615693

Estimator: ElasticNetCV Time (training): 2.9978723526000977 Time (inference): 0.00686335563659668 RMSE 4.224416765751234

Estimator: ExtraTreeRegressor Time (training): 0.25699806213378906 Time (inference): 0.02178335189819336 RMSE 2.655690158719138

Estimator: SVR Time (training): 2.04705548286438 Time (inference): 0.27837133407592773 RMSE 3.6491765040296373

|  | estimator | RMSE | training_time (s) | inference_time (s) |
|---|---|---|---|---|
| 3 | ExtraTreeRegressor | 2.66 | 0.26 | 0.02 |
| 0 | BaggingRegressor | 2.77 | 9.52 | 0.27 |
| 4 | SVR | 3.65 | 2.05 | 0.28 |
| 2 | ElasticNetCV | 4.22 | 3.00 | 0.01 |
| 1 | DecisionTreeRegressor | 4.40 | 0.61 | 0.02 |
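Here is a minimal sketch of bootstrap aggregating (bagging) with randomized features: each member is fit independently on a bootstrap sample of the original features augmented with random tanh features, and predictions are averaged. The class name `RandomFeatureBagger`, the tanh random-feature map, and the defaults are illustrative assumptions, not genbooster's `RandomBagRegressor` implementation.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_diabetes
from sklearn.model_selection import train_test_split
from sklearn.tree import ExtraTreeRegressor


class RandomFeatureBagger:
    """Hypothetical sketch: every member is fit on a bootstrap sample of
    [X, tanh(X W + b)], with its own random (W, b); predictions are averaged."""

    def __init__(self, base_estimator, n_estimators=50, n_hidden_features=5, seed=123):
        self.base_estimator = base_estimator
        self.n_estimators = n_estimators
        self.n_hidden_features = n_hidden_features
        self.seed = seed

    @staticmethod
    def _augment(Xs, W, b):
        # Original (standardized) features plus randomized nonlinear features
        return np.hstack([Xs, np.tanh(Xs @ W + b)])

    def fit(self, X, y):
        X = np.asarray(X, dtype=np.float64)
        y = np.asarray(y, dtype=np.float64)
        rng = np.random.default_rng(self.seed)
        n_samples, n_features = X.shape
        self.mean_, self.scale_ = X.mean(axis=0), X.std(axis=0) + 1e-12
        Xs = (X - self.mean_) / self.scale_
        self.members_ = []
        for _ in range(self.n_estimators):
            # Bootstrap: draw n_samples row indices with replacement
            idx = rng.integers(0, n_samples, size=n_samples)
            W = rng.standard_normal((n_features, self.n_hidden_features))
            b = rng.standard_normal(self.n_hidden_features)
            # Each member is fit to y itself, independently of the other members
            learner = clone(self.base_estimator).fit(self._augment(Xs[idx], W, b), y[idx])
            self.members_.append((W, b, learner))
        return self

    def predict(self, X):
        Xs = (np.asarray(X, dtype=np.float64) - self.mean_) / self.scale_
        # Aggregate by averaging the members' predictions
        all_preds = [learner.predict(self._augment(Xs, W, b)) for W, b, learner in self.members_]
        return np.mean(all_preds, axis=0)


if __name__ == "__main__":
    X, y = load_diabetes(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
    model = RandomFeatureBagger(ExtraTreeRegressor(random_state=0)).fit(X_train, y_train)
    rmse = np.sqrt(np.mean((model.predict(X_test) - y_test) ** 2))
    print(f"Test RMSE: {rmse:.2f}")
```

Because the members never depend on one another, they could be trained in parallel, whereas boosting has to fit its learners one after the other on updated residuals.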

R version

!mkdir -p ~/.virtualenvs

!python3 -m venv ~/.virtualenvs/r-reticulate

# Each ! line runs in its own shell, so install with the virtual environment's own pip
!~/.virtualenvs/r-reticulate/bin/pip install numpy pandas matplotlib scikit-learn tqdm genbooster

utils::install.packages("reticulate")

library(reticulate)

# Use a virtual environment or conda environment to manage Python dependencies

use_virtualenv("r-reticulate", required = TRUE)

# Import required Python libraries

np <- import("numpy")

pd <- import("pandas")

plt <- import("matplotlib.pyplot")

sklearn <- import("sklearn")

tqdm <- import("tqdm")

time <- import("time")

# Import genbooster estimators and specific sklearn utilities

BoosterRegressor <- import("genbooster.genboosterregressor", convert = FALSE)$BoosterRegressor

BoosterClassifier <- import("genbooster.genboosterclassifier", convert = FALSE)$BoosterClassifier

RandomBagRegressor <- import("genbooster.randombagregressor", convert = FALSE)$RandomBagRegressor

RandomBagClassifier <- import("genbooster.randombagclassifier", convert = FALSE)$RandomBagClassifier

ExtraTreeRegressor <- import("sklearn.tree")$ExtraTreeRegressor

mean_squared_error <- import("sklearn.metrics")$mean_squared_error

train_test_split <- import("sklearn.model_selection")$train_test_split

# Load Boston dataset

url <- "https://raw.githubusercontent.com/Techtonique/datasets/refs/heads/main/tabular/regression/boston_dataset2.csv"

df <- read.csv(url)

# Split dataset into features and target

y <- df[["target"]]

X <- df[, setdiff(colnames(df), c("target", "training_index"))]

# Split into training and testing sets

set.seed(123)

index_train <- sample.int(nrow(df), nrow(df) * 0.8, replace = FALSE)

X_train <- X[index_train, ]

X_test <- X[-index_train,]

y_train <- y[index_train]

y_test <- y[-index_train]

# BoosterRegressor on Boston dataset

regr <- BoosterRegressor(base_estimator = ExtraTreeRegressor())

start <- time$time()

regr$fit(X_train, y_train)

end <- time$time()

cat(sprintf("Time taken: %.2f seconds\n", end - start))

rmse <- np$sqrt(mean_squared_error(y_test, regr$predict(X_test)))

cat(sprintf("BoosterRegressor RMSE: %.2f\n", rmse))

# RandomBagRegressor on Boston dataset

regr <- RandomBagRegressor(base_estimator = ExtraTreeRegressor())

start <- time$time()

regr$fit(X_train, y_train)

end <- time$time()

cat(sprintf("Time taken: %.2f seconds\n", end - start))

rmse <- np$sqrt(mean_squared_error(y_test, regr$predict(X_test)))

cat(sprintf("RandomBagRegressor RMSE: %.2f\n", rmse))

# Classification on the iris dataset (classes encoded as 0, 1, 2)
X <- as.matrix(iris[, 1:4])

y <- as.numeric(iris[, 5]) - 1

# Split into training and testing sets

set.seed(123)

index_train <- sample.int(nrow(iris), nrow(iris) * 0.8, replace = FALSE)

X_train <- X[index_train, ]

X_test <- X[-index_train,]

y_train <- y[index_train]

y_test <- y[-index_train]

regr <- BoosterClassifier(base_estimator = ExtraTreeRegressor())

start <- time$time()

regr$fit(X_train, y_train)

end <- time$time()

cat(sprintf("Time taken: %.2f seconds\n", end - start))

accuracy <- mean(y_test == as.numeric(regr$predict(X_test)))

cat(sprintf("BoosterClassifier accuracy: %.2f\n", accuracy))

regr <- RandomBagClassifier(base_estimator = ExtraTreeRegressor())

start <- time$time()

regr$fit(X_train, y_train)

end <- time$time()

cat(sprintf("Time taken: %.2f seconds\n", end - start))

accuracy <- mean(y_test == as.numeric(regr$predict(X_test)))

cat(sprintf("RandomBagClassifier accuracy: %.2f\n", accuracy))

Time taken: 0.39 seconds

BoosterRegressor RMSE: 3.49

Time taken: 0.44 seconds

RandomBagRegressor RMSE: 4.06

Time taken: 0.28 seconds

BoosterClassifier accuracy: 0.97

Time taken: 0.37 seconds

RandomBagClassifier accuracy: 0.97

For attribution, please cite this work as:

T. Moudiki (2025-01-27). Gradient-Boosting and Boostrap aggregating anything (alert: high performance): Part5, easier install and Rust backend. Retrieved from https://thierrymoudiki.github.io/blog/2025/01/27/python/r/genbooster-rust

BibTeX citation (remove empty spaces)

@misc{ tmoudiki20250127,

author = { T. Moudiki },

title = { Gradient-Boosting and Boostrap aggregating anything (alert: high performance): Part5, easier install and Rust backend },

url = { https://thierrymoudiki.github.io/blog/2025/01/27/python/r/genbooster-rust },

year = { 2025 } }

Previous publications

- Understanding Boosted Configuration Networks (combined neural networks and boosting): An Intuitive Guide Through Their Hyperparameters Feb 16, 2026

- R version of Python package survivalist, for model-agnostic survival analysis Feb 9, 2026

- Presenting Lightweight Transfer Learning for Financial Forecasting (Risk 2026) Feb 4, 2026

- Option pricing using time series models as market price of risk Feb 1, 2026

- Enhancing Time Series Forecasting (ahead::ridge2f) with Attention-Based Context Vectors (ahead::contextridge2f) Jan 31, 2026

- Overfitting and scaling (on GPU T4) tests on nnetsauce.CustomRegressor Jan 29, 2026

- Beyond Cross-validation: Hyperparameter Optimization via Generalization Gap Modeling Jan 25, 2026

- GPopt for Machine Learning (hyperparameters' tuning) Jan 21, 2026

- rtopy: an R to Python bridge -- novelties Jan 8, 2026

- Python examples for 'Beyond Nelson-Siegel and splines: A model- agnostic Machine Learning framework for discount curve calibration, interpolation and extrapolation' Jan 3, 2026

- Forecasting benchmark: Dynrmf (a new serious competitor in town) vs Theta Method on M-Competitions and Tourism competitition Jan 1, 2026

- Finally figured out a way to port python packages to R using uv and reticulate: example with nnetsauce Dec 17, 2025

- Overfitting Random Fourier Features: Universal Approximation Property Dec 13, 2025

- Counterfactual Scenario Analysis with ahead::ridge2f Dec 11, 2025

- Zero-Shot Probabilistic Time Series Forecasting with TabPFN 2.5 and nnetsauce Dec 10, 2025

- ARIMA Pricing: Semi-Parametric Market price of risk for Risk-Neutral Pricing (code + preprint) Dec 7, 2025

- Analyzing Paper Reviews with LLMs: I Used ChatGPT, DeepSeek, Qwen, Mistral, Gemini, and Claude (and you should too + publish the analysis) Dec 3, 2025

- tisthemachinelearner: New Workflow with uv for R Integration of scikit-learn Dec 1, 2025

- (ICYMI) RPweave: Unified R + Python + LaTeX System using uv Nov 21, 2025

- unifiedml: A Unified Machine Learning Interface for R, is now on CRAN + Discussion about AI replacing humans Nov 16, 2025

- Context-aware Theta forecasting Method: Extending Classical Time Series Forecasting with Machine Learning Nov 13, 2025

- unifiedml in R: A Unified Machine Learning Interface Nov 5, 2025

- Deterministic Shift Adjustment in Arbitrage-Free Pricing (historical to risk-neutral short rates) Oct 28, 2025

- New instantaneous short rates models with their deterministic shift adjustment, for historical and risk-neutral simulation Oct 27, 2025

- RPweave: Unified R + Python + LaTeX System using uv Oct 19, 2025

- GAN-like Synthetic Data Generation Examples (on univariate, multivariate distributions, digits recognition, Fashion-MNIST, stock returns, and Olivetti faces) with DistroSimulator Oct 19, 2025

- Part2 of More data (> 150 files) on T. Moudiki's situation: a riddle/puzzle (including R, Python, bash interfaces to the game -- but everyone can play) Oct 16, 2025

- More data (> 150 files) on T. Moudiki's situation: a riddle/puzzle (including R, Python, bash interfaces to the game -- but everyone can play) Oct 12, 2025

- R port of llama2.c Oct 9, 2025

- Native uncertainty quantification for time series with NGBoost Oct 8, 2025

- NGBoost (Natural Gradient Boosting) for Regression, Classification, Time Series forecasting and Reserving Oct 6, 2025

- Real-time pricing with a pretrained probabilistic stock return model Oct 1, 2025

- Combining any model with GARCH(1,1) for probabilistic stock forecasting Sep 23, 2025

- Generating Synthetic Data with R-vine Copulas using esgtoolkit in R Sep 21, 2025

- Reimagining Equity Solvency Capital Requirement Approximation (one of my Master's Thesis subjects): From Bilinear Interpolation to Probabilistic Machine Learning Sep 16, 2025

- Transfer Learning using ahead::ridge2f on synthetic stocks returns Pt.2: synthetic data generation Sep 9, 2025

- Transfer Learning using ahead::ridge2f on synthetic stocks returns Sep 8, 2025

- I'm supposed to present 'Conformal Predictive Simulations for Univariate Time Series' at COPA CONFERENCE 2025 in London... Sep 4, 2025

- external regressors in ahead::dynrmf's interface for Machine learning forecasting Sep 1, 2025

- Another interesting decision, now for 'Beyond Nelson-Siegel and splines: A model-agnostic Machine Learning framework for discount curve calibration, interpolation and extrapolation' Aug 20, 2025

- Boosting any randomized based learner for regression, classification and univariate/multivariate time series forcasting Jul 26, 2025

- New nnetsauce version with CustomBackPropRegressor (CustomRegressor with Backpropagation) and ElasticNet2Regressor (Ridge2 with ElasticNet regularization) Jul 15, 2025

- mlsauce (home to a model-agnostic gradient boosting algorithm) can now be installed from PyPI. Jul 10, 2025

- A user-friendly graphical interface to techtonique dot net's API (will eventually contain graphics). Jul 8, 2025

- Calling =TECHTO_MLCLASSIFICATION for Machine Learning supervised CLASSIFICATION in Excel is just a matter of copying and pasting Jul 7, 2025

- Calling =TECHTO_MLREGRESSION for Machine Learning supervised regression in Excel is just a matter of copying and pasting Jul 6, 2025

- Calling =TECHTO_RESERVING and =TECHTO_MLRESERVING for claims triangle reserving in Excel is just a matter of copying and pasting Jul 5, 2025

- Calling =TECHTO_SURVIVAL for Survival Analysis in Excel is just a matter of copying and pasting Jul 4, 2025

- Calling =TECHTO_SIMULATION for Stochastic Simulation in Excel is just a matter of copying and pasting Jul 3, 2025

- Calling =TECHTO_FORECAST for forecasting in Excel is just a matter of copying and pasting Jul 2, 2025

- Random Vector Functional Link (RVFL) artificial neural network with 2 regularization parameters successfully used for forecasting/synthetic simulation in professional settings: Extensions (including Bayesian) Jul 1, 2025

- R version of 'Backpropagating quasi-randomized neural networks' Jun 24, 2025

- Backpropagating quasi-randomized neural networks Jun 23, 2025

- Beyond ARMA-GARCH: leveraging any statistical model for volatility forecasting Jun 21, 2025

- Stacked generalization (Machine Learning model stacking) + conformal prediction for forecasting with ahead::mlf Jun 18, 2025

- An Overfitting dilemma: XGBoost Default Hyperparameters vs GenericBooster + LinearRegression Default Hyperparameters Jun 14, 2025

- Programming language-agnostic reserving using RidgeCV, LightGBM, XGBoost, and ExtraTrees Machine Learning models Jun 13, 2025

- Exceptionally, and on a more personal note (otherwise I may get buried alive)... Jun 10, 2025

- Free R, Python and SQL editors in techtonique dot net Jun 9, 2025

- Beyond Nelson-Siegel and splines: A model-agnostic Machine Learning framework for discount curve calibration, interpolation and extrapolation Jun 7, 2025

- scikit-learn, glmnet, xgboost, lightgbm, pytorch, keras, nnetsauce in probabilistic Machine Learning (for longitudinal data) Reserving (work in progress) Jun 6, 2025

- R version of Probabilistic Machine Learning (for longitudinal data) Reserving (work in progress) Jun 5, 2025

- Probabilistic Machine Learning (for longitudinal data) Reserving (work in progress) Jun 4, 2025

- Python version of Beyond ARMA-GARCH: leveraging model-agnostic Quasi-Randomized networks and conformal prediction for nonparametric probabilistic stock forecasting (ML-ARCH) Jun 3, 2025

- Beyond ARMA-GARCH: leveraging model-agnostic Machine Learning and conformal prediction for nonparametric probabilistic stock forecasting (ML-ARCH) Jun 2, 2025

- Permutations and SHAPley values for feature importance in techtonique dot net's API (with R + Python + the command line) Jun 1, 2025

- Which patient is going to survive longer? Another guide to using techtonique dot net's API (with R + Python + the command line) for survival analysis May 31, 2025

- A Guide to Using techtonique.net's API and rush for simulating and plotting Stochastic Scenarios May 30, 2025

- Simulating Stochastic Scenarios with Diffusion Models: A Guide to Using techtonique.net's API for the purpose May 29, 2025

- Will my apartment in 5th avenue be overpriced or not? Harnessing the power of www.techtonique.net (+ xgboost, lightgbm, catboost) to find out May 28, 2025

- How long must I wait until something happens: A Comprehensive Guide to Survival Analysis via an API May 27, 2025

- Harnessing the Power of techtonique.net: A Comprehensive Guide to Machine Learning Classification via an API May 26, 2025

- Quantile regression with any regressor -- Examples with RandomForestRegressor, RidgeCV, KNeighborsRegressor May 20, 2025

- Survival stacking: survival analysis translated as supervised classification in R and Python May 5, 2025

- 'Bayesian' optimization of hyperparameters in an R machine learning model using the bayesianrvfl package Apr 25, 2025

- A lightweight interface to scikit-learn in R: Bayesian and Conformal prediction Apr 21, 2025

- A lightweight interface to scikit-learn in R Pt.2: probabilistic time series forecasting in conjunction with ahead::dynrmf Apr 20, 2025

- Extending the Theta forecasting method to GLMs, GAMs, GLMBOOST and attention: benchmarking on Tourism, M1, M3 and M4 competition data sets (28000 series) Apr 14, 2025

- Extending the Theta forecasting method to GLMs and attention Apr 8, 2025

- Nonlinear conformalized Generalized Linear Models (GLMs) with R package 'rvfl' (and other models) Mar 31, 2025

- Probabilistic Time Series Forecasting (predictive simulations) in Microsoft Excel using Python, xlwings lite and www.techtonique.net Mar 28, 2025

- Conformalize (improved prediction intervals and simulations) any R Machine Learning model with misc::conformalize Mar 25, 2025

- My poster for the 18th FINANCIAL RISKS INTERNATIONAL FORUM by Institut Louis Bachelier/Fondation du Risque/Europlace Institute of Finance Mar 19, 2025

- Interpretable probabilistic kernel ridge regression using Matérn 3/2 kernels Mar 16, 2025

- (News from) Probabilistic Forecasting of univariate and multivariate Time Series using Quasi-Randomized Neural Networks (Ridge2) and Conformal Prediction Mar 9, 2025

- Word-Online: re-creating Karpathy's char-RNN (with supervised linear online learning of word embeddings) for text completion Mar 8, 2025

- CRAN-like repository for most recent releases of Techtonique's R packages Mar 2, 2025

- Presenting 'Online Probabilistic Estimation of Carbon Beta and Carbon Shapley Values for Financial and Climate Risk' at Institut Louis Bachelier Feb 27, 2025

- Web app with DeepSeek R1 and Hugging Face API for chatting Feb 23, 2025

- tisthemachinelearner: A Lightweight interface to scikit-learn with 2 classes, Classifier and Regressor (in Python and R) Feb 17, 2025

- R version of survivalist: Probabilistic model-agnostic survival analysis using scikit-learn, xgboost, lightgbm (and conformal prediction) Feb 12, 2025

- Model-agnostic global Survival Prediction of Patients with Myeloid Leukemia in QRT/Gustave Roussy Challenge (challengedata.ens.fr): Python's survivalist Quickstart Feb 10, 2025

- A simple test of the martingale hypothesis in esgtoolkit Feb 3, 2025

- Command Line Interface (CLI) for techtonique.net's API Jan 31, 2025

- Gradient-Boosting and Bootstrap aggregating anything (alert: high performance): Part5, easier install and Rust backend Jan 27, 2025

- Just got a paper on conformal prediction REJECTED by International Journal of Forecasting despite evidence on 30,000 time series (and more). What's going on? Part2: 1311 time series from the Tourism competition Jan 20, 2025

- Techtonique is out! (with a tutorial in various programming languages and formats) Jan 14, 2025

- Univariate and Multivariate Probabilistic Forecasting with nnetsauce and TabPFN Jan 14, 2025

- Just got a paper on conformal prediction REJECTED by International Journal of Forecasting despite evidence on 30,000 time series (and more). What's going on? Jan 5, 2025

- Python and Interactive dashboard version of Stock price forecasting with Deep Learning: throwing power at the problem (and why it won't make you rich) Dec 31, 2024

- Stock price forecasting with Deep Learning: throwing power at the problem (and why it won't make you rich) Dec 29, 2024

- No-code Machine Learning Cross-validation and Interpretability in techtonique.net Dec 23, 2024

- survivalist: Probabilistic model-agnostic survival analysis using scikit-learn, glmnet, xgboost, lightgbm, pytorch, keras, nnetsauce and mlsauce Dec 15, 2024

- Model-agnostic 'Bayesian' optimization (for hyperparameter tuning) using conformalized surrogates in GPopt Dec 9, 2024

- You can beat Forecasting LLMs (Large Language Models a.k.a. foundation models) with nnetsauce.MTS Pt.2: Generic Gradient Boosting Dec 1, 2024

- You can beat Forecasting LLMs (Large Language Models a.k.a. foundation models) with nnetsauce.MTS Nov 24, 2024

- Unified interface and conformal prediction (calibrated prediction intervals) for R package forecast (and 'affiliates') Nov 23, 2024

- GLMNet in Python: Generalized Linear Models Nov 18, 2024

- Gradient-Boosting anything (alert: high performance): Part4, Time series forecasting Nov 10, 2024

- Predictive scenarios simulation in R, Python and Excel using Techtonique API Nov 3, 2024

- Chat with your tabular data in www.techtonique.net Oct 30, 2024

- Gradient-Boosting anything (alert: high performance): Part3, Histogram-based boosting Oct 28, 2024

- R editor and SQL console (in addition to Python editors) in www.techtonique.net Oct 21, 2024

- R and Python consoles + JupyterLite in www.techtonique.net Oct 15, 2024

- Gradient-Boosting anything (alert: high performance): Part2, R version Oct 14, 2024

- Gradient-Boosting anything (alert: high performance) Oct 6, 2024

- Benchmarking 30 statistical/Machine Learning models on the VN1 Forecasting -- Accuracy challenge Oct 4, 2024

- Automated random variable distribution inference using Kullback-Leibler divergence and simulating best-fitting distribution Oct 2, 2024

- Forecasting in Excel using Techtonique's Machine Learning APIs under the hood Sep 30, 2024

- Techtonique web app for data-driven decisions using Mathematics, Statistics, Machine Learning, and Data Visualization Sep 25, 2024

- Parallel for loops (Map or Reduce) + New versions of nnetsauce and ahead Sep 16, 2024

- Adaptive (online/streaming) learning with uncertainty quantification using Polyak averaging in learningmachine Sep 10, 2024

- New versions of nnetsauce and ahead Sep 9, 2024

- Prediction sets and prediction intervals for conformalized Auto XGBoost, Auto LightGBM, Auto CatBoost, Auto GradientBoosting Sep 2, 2024

- Quick/automated R package development workflow (assuming you're using macOS or Linux) Part2 Aug 30, 2024

- R package development workflow (assuming you're using macOS or Linux) Aug 27, 2024

- A new method for deriving a nonparametric confidence interval for the mean Aug 26, 2024

- Conformalized adaptive (online/streaming) learning using learningmachine in Python and R Aug 19, 2024

- Bayesian (nonlinear) adaptive learning Aug 12, 2024

- Auto XGBoost, Auto LightGBM, Auto CatBoost, Auto GradientBoosting Aug 5, 2024

- Copulas for uncertainty quantification in time series forecasting Jul 28, 2024

- Forecasting uncertainty: sequential split conformal prediction + Block bootstrap (web app) Jul 22, 2024

- learningmachine for Python (new version) Jul 15, 2024

- learningmachine v2.0.0: Machine Learning with explanations and uncertainty quantification Jul 8, 2024

- My presentation at ISF 2024 conference (slides with nnetsauce probabilistic forecasting news) Jul 3, 2024

- 10 uncertainty quantification methods in nnetsauce forecasting Jul 1, 2024

- Forecasting with XGBoost embedded in Quasi-Randomized Neural Networks Jun 24, 2024

- Forecasting Monthly Airline Passenger Numbers with Quasi-Randomized Neural Networks Jun 17, 2024

- Automated hyperparameter tuning using any conformalized surrogate Jun 9, 2024

- Recognizing handwritten digits with Ridge2Classifier Jun 3, 2024

- Forecasting the Economy May 27, 2024

- A detailed introduction to Deep Quasi-Randomized 'neural' networks May 19, 2024

- Probability of receiving a loan; using learningmachine May 12, 2024

- mlsauce's `v0.18.2`: various examples and benchmarks with dimension reduction May 6, 2024

- mlsauce's `v0.17.0`: boosting with Elastic Net, polynomials and heterogeneity in explanatory variables Apr 29, 2024

- mlsauce's `v0.13.0`: taking into account inputs heterogeneity through clustering Apr 21, 2024

- mlsauce's `v0.12.0`: prediction intervals for LSBoostRegressor Apr 15, 2024

- Conformalized predictive simulations for univariate time series on more than 250 data sets Apr 7, 2024

- learningmachine v1.1.2: for Python Apr 1, 2024

- learningmachine v1.0.0: prediction intervals around the probability of the event 'a tumor being malignant' Mar 25, 2024

- Bayesian inference and conformal prediction (prediction intervals) in nnetsauce v0.18.1 Mar 18, 2024

- Multiple examples of Machine Learning forecasting with ahead Mar 11, 2024

- rtopy (v0.1.1): calling R functions in Python Mar 4, 2024

- ahead forecasting (v0.10.0): fast time series model calibration and Python plots Feb 26, 2024

- A plethora of datasets at your fingertips Part3: how many times do couples cheat on each other? Feb 19, 2024

- nnetsauce's introduction as of 2024-02-11 (new version 0.17.0) Feb 11, 2024

- Tuning Machine Learning models with GPopt's new version Part 2 Feb 5, 2024

- Tuning Machine Learning models with GPopt's new version Jan 29, 2024

- Subsampling continuous and discrete response variables Jan 22, 2024

- DeepMTS, a Deep Learning Model for Multivariate Time Series Jan 15, 2024

- A classifier that's very accurate (and deep) Pt.2: there are > 90 classifiers in nnetsauce Jan 8, 2024

- learningmachine: prediction intervals for conformalized Kernel ridge regression and Random Forest Jan 1, 2024

- A plethora of datasets at your fingertips Part2: how many times do couples cheat on each other? Descriptive analytics, interpretability and prediction intervals using conformal prediction Dec 25, 2023

- Diffusion models in Python with esgtoolkit (Part2) Dec 18, 2023

- Diffusion models in Python with esgtoolkit Dec 11, 2023

- Julia packaging at the command line Dec 4, 2023

- Quasi-randomized nnetworks in Julia, Python and R Nov 27, 2023

- A plethora of datasets at your fingertips Nov 20, 2023

- A classifier that's very accurate (and deep) Nov 12, 2023

- mlsauce version 0.8.10: Statistical/Machine Learning with Python and R Nov 5, 2023

- AutoML in nnetsauce (randomized and quasi-randomized nnetworks) Pt.2: multivariate time series forecasting Oct 29, 2023

- AutoML in nnetsauce (randomized and quasi-randomized nnetworks) Oct 22, 2023

- Version v0.14.0 of nnetsauce for R and Python Oct 16, 2023

- A diffusion model: G2++ Oct 9, 2023

- Diffusion models in ESGtoolkit + announcements Oct 2, 2023

- An infinity of time series forecasting models in nnetsauce (Part 2 with uncertainty quantification) Sep 25, 2023

- (News from) forecasting in Python with ahead (progress bars and plots) Sep 18, 2023

- Forecasting in Python with ahead Sep 11, 2023

- Risk-neutralize simulations Sep 4, 2023

- Comparing cross-validation results using crossval_ml and boxplots Aug 27, 2023

- Reminder Apr 30, 2023

- Did you ask ChatGPT about who you are? Apr 16, 2023

- A new version of nnetsauce (randomized and quasi-randomized 'neural' networks) Apr 2, 2023

- Simple interfaces to the forecasting API Nov 23, 2022

- A web application for forecasting in Python, R, Ruby, C#, JavaScript, PHP, Go, Rust, Java, MATLAB, etc. Nov 2, 2022

- Prediction intervals (not only) for Boosted Configuration Networks in Python Oct 5, 2022

- Boosted Configuration (neural) Networks Pt. 2 Sep 3, 2022

- Boosted Configuration (_neural_) Networks for classification Jul 21, 2022

- A Machine Learning workflow using Techtonique Jun 6, 2022

- Super Mario Bros © in the browser using PyScript May 8, 2022

- News from ESGtoolkit, ycinterextra, and nnetsauce Apr 4, 2022

- Explaining a Keras _neural_ network predictions with the-teller Mar 11, 2022

- New version of nnetsauce -- various quasi-randomized networks Feb 12, 2022

- A dashboard illustrating bivariate time series forecasting with `ahead` Jan 14, 2022

- Hundreds of Statistical/Machine Learning models for univariate time series, using ahead, ranger, xgboost, and caret Dec 20, 2021

- Forecasting with `ahead` (Python version) Dec 13, 2021

- Tuning and interpreting LSBoost Nov 15, 2021

- Time series cross-validation using `crossvalidation` (Part 2) Nov 7, 2021

- Fast and scalable forecasting with ahead::ridge2f Oct 31, 2021

- Automatic Forecasting with `ahead::dynrmf` and Ridge regression Oct 22, 2021

- Forecasting with `ahead` Oct 15, 2021

- Classification using linear regression Sep 26, 2021

- `crossvalidation` and random search for calibrating support vector machines Aug 6, 2021

- parallel grid search cross-validation using `crossvalidation` Jul 31, 2021

- `crossvalidation` on R-universe, plus a classification example Jul 23, 2021

- Documentation and source code for GPopt, a package for Bayesian optimization Jul 2, 2021

- Hyperparameters tuning with GPopt Jun 11, 2021

- A forecasting tool (API) with examples in curl, R, Python May 28, 2021

- Bayesian Optimization with GPopt Part 2 (save and resume) Apr 30, 2021

- Bayesian Optimization with GPopt Apr 16, 2021

- Compatibility of nnetsauce and mlsauce with scikit-learn Mar 26, 2021

- Explaining xgboost predictions with the teller Mar 12, 2021

- An infinity of time series models in nnetsauce Mar 6, 2021

- New activation functions in mlsauce's LSBoost Feb 12, 2021

- 2020 recap, Gradient Boosting, Generalized Linear Models, AdaOpt with nnetsauce and mlsauce Dec 29, 2020

- A deeper learning architecture in nnetsauce Dec 18, 2020

- Classify penguins with nnetsauce's MultitaskClassifier Dec 11, 2020

- Bayesian forecasting for uni/multivariate time series Dec 4, 2020

- Generalized nonlinear models in nnetsauce Nov 28, 2020

- Boosting nonlinear penalized least squares Nov 21, 2020

- Statistical/Machine Learning explainability using Kernel Ridge Regression surrogates Nov 6, 2020

- NEWS Oct 30, 2020

- A glimpse into my PhD journey Oct 23, 2020

- Submitting R package to CRAN Oct 16, 2020

- Simulation of dependent variables in ESGtoolkit Oct 9, 2020

- Forecasting lung disease progression Oct 2, 2020

- New nnetsauce Sep 25, 2020

- Technical documentation Sep 18, 2020

- A new version of nnetsauce, and a new Techtonique website Sep 11, 2020

- Back next week, and a few announcements Sep 4, 2020

- Explainable 'AI' using Gradient Boosted randomized networks Pt2 (the Lasso) Jul 31, 2020

- LSBoost: Explainable 'AI' using Gradient Boosted randomized networks (with examples in R and Python) Jul 24, 2020

- nnetsauce version 0.5.0, randomized neural networks on GPU Jul 17, 2020

- Maximizing your tip as a waiter (Part 2) Jul 10, 2020

- New version of mlsauce, with Gradient Boosted randomized networks and stump decision trees Jul 3, 2020

- Announcements Jun 26, 2020

- Parallel AdaOpt classification Jun 19, 2020

- Comments section and other news Jun 12, 2020

- Maximizing your tip as a waiter Jun 5, 2020

- AdaOpt classification on MNIST handwritten digits (without preprocessing) May 29, 2020

- AdaOpt (a probabilistic classifier based on a mix of multivariable optimization and nearest neighbors) for R May 22, 2020

- AdaOpt May 15, 2020

- Custom errors for cross-validation using crossval::crossval_ml May 8, 2020

- Documentation+Pypi for the `teller`, a model-agnostic tool for Machine Learning explainability May 1, 2020

- Encoding your categorical variables based on the response variable and correlations Apr 24, 2020

- Linear model, xgboost and randomForest cross-validation using crossval::crossval_ml Apr 17, 2020

- Grid search cross-validation using crossval Apr 10, 2020

- Documentation for the querier, a query language for Data Frames Apr 3, 2020

- Time series cross-validation using crossval Mar 27, 2020

- On model specification, identification, degrees of freedom and regularization Mar 20, 2020

- Import data into the querier (now on Pypi), a query language for Data Frames Mar 13, 2020

- R notebooks for nnetsauce Mar 6, 2020

- Version 0.4.0 of nnetsauce, with fruits and breast cancer classification Feb 28, 2020

- Create a specific feed in your Jekyll blog Feb 21, 2020

- Git/Github for contributing to package development Feb 14, 2020

- Feedback forms for contributing Feb 7, 2020

- nnetsauce for R Jan 31, 2020

- A new version of nnetsauce (v0.3.1) Jan 24, 2020

- ESGtoolkit, a tool for Monte Carlo simulation (v0.2.0) Jan 17, 2020

- Search bar, new year 2020 Jan 10, 2020

- 2019 Recap, the nnetsauce, the teller and the querier Dec 20, 2019

- Understanding model interactions with the `teller` Dec 13, 2019

- Using the `teller` on a classifier Dec 6, 2019

- Benchmarking the querier's verbs Nov 29, 2019

- Composing the querier's verbs for data wrangling Nov 22, 2019

- Comparing and explaining model predictions with the teller Nov 15, 2019

- Tests for the significance of marginal effects in the teller Nov 8, 2019

- Introducing the teller Nov 1, 2019

- Introducing the querier Oct 25, 2019

- Prediction intervals for nnetsauce models Oct 18, 2019

- Using R in Python for statistical learning/data science Oct 11, 2019

- Model calibration with `crossval` Oct 4, 2019

- Bagging in the nnetsauce Sep 25, 2019

- Adaboost learning with nnetsauce Sep 18, 2019

- Change in blog's presentation Sep 4, 2019

- nnetsauce on Pypi Jun 5, 2019

- More nnetsauce (examples of use) May 9, 2019

- nnetsauce Mar 13, 2019

- crossval Mar 13, 2019

- test Mar 10, 2019
