As a reminder, nnetsauce, mlsauce, the querier and the teller are now stored under Techtonique. If you have already starred them, you can carry your stars over there! A new Techtonique website is also out now; it contains documentation and examples for nnetsauce, mlsauce, the querier and the teller, and is a work in progress.

Figure: New Techtonique Website

In addition, a new version of nnetsauce including nonlinear Generalized Linear Models (GLMs) has been released, both on PyPI and GitHub.

- Installing from PyPI:

pip install nnetsauce

- Installing from GitHub:

pip install git+https://github.com/Techtonique/nnetsauce.git

I’ve been experiencing some issues when installing from PyPI lately. Please feel free to report any issue you run into, or use the GitHub version instead. You can still execute the following Jupyter notebook (see the bottom for nonlinear GLMs in particular) to see what’s changed:

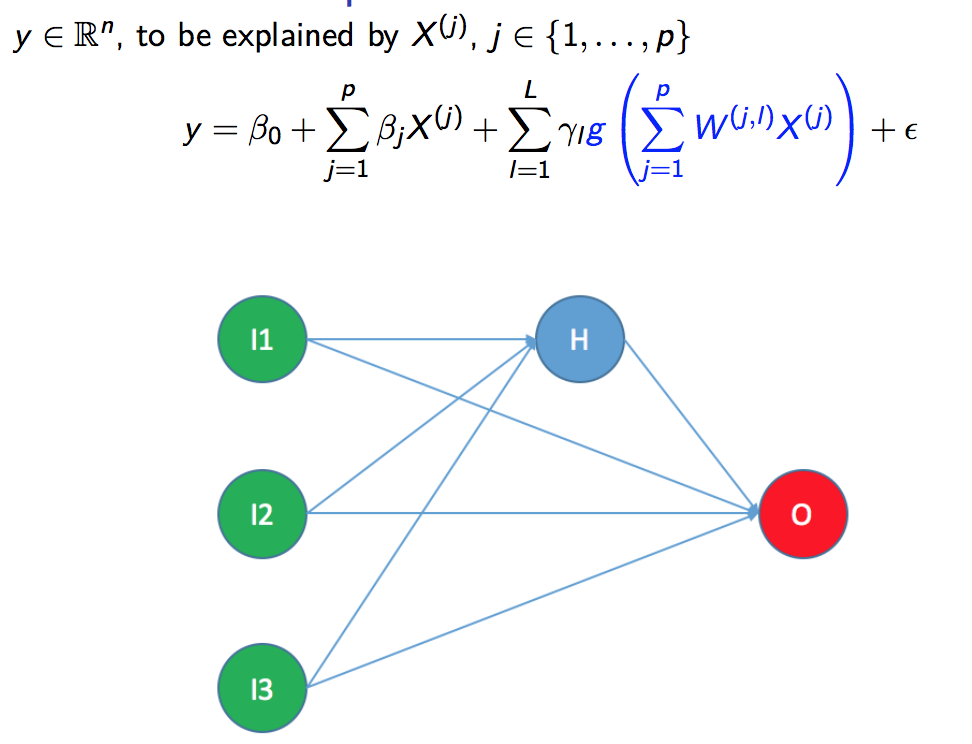

How do these nonlinear GLMs work? Let’s say, as a highly simplified example, that we want to predict the final adult height of a basketball player, using his current height and his age. We’d use historical data on the heights and ages (covariates) and the final heights (response) of multiple players:

1 - The input data, the covariates, are transformed into new covariates, as we’ve already seen before for nnetsauce. You can visit this page for a refresher: References.

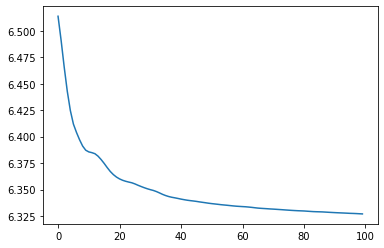

2 - A loss function, which measures how well the model fits the observed data, is optimized as represented in the figure below. Currently, two optimization methods are used: stochastic gradient descent and stochastic coordinate descent. Their hyperparameters notably include early stopping criteria and regularization to prevent overfitting.

Figure: GLM loss function (from notebook)
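
The two steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not nnetsauce's actual implementation: the `tanh` activation with random weights, the squared-error loss (a Gaussian GLM with identity link), the ridge penalty, and the patience-based early stopping rule are all choices made for this example. The function names (`transform_covariates`, `penalized_loss`, `fit_sgd`) are hypothetical, and the data are synthetic.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def transform_covariates(X, n_hidden=5, seed=123):
    """Step 1 (assumed form): append tanh(x . w_j) for random directions w_j."""
    rng = random.Random(seed)
    n_features = len(X[0])
    W = [[rng.uniform(-1.0, 1.0) for _ in range(n_features)] for _ in range(n_hidden)]
    # Each output row is: intercept, original covariates, new nonlinear covariates.
    return [[1.0] + list(x) + [math.tanh(dot(x, w)) for w in W] for x in X]


def penalized_loss(Z, y, beta, lam):
    """Step 2 (assumed form): mean squared error plus a ridge penalty."""
    mse = sum((dot(z, beta) - t) ** 2 for z, t in zip(Z, y)) / len(y)
    return mse + lam * dot(beta, beta)


def fit_sgd(Z, y, lam=1e-3, lr=0.05, n_epochs=200, patience=10, tol=1e-6, seed=123):
    """Stochastic gradient descent with regularization and early stopping."""
    rng = random.Random(seed)
    beta = [0.0] * len(Z[0])
    best_beta, best_loss = beta[:], penalized_loss(Z, y, beta, lam)
    history = [best_loss]
    stalled = 0
    order = list(range(len(y)))
    for _ in range(n_epochs):
        rng.shuffle(order)
        for i in order:
            err = dot(Z[i], beta) - y[i]
            # Gradient of (err^2 + lam * ||beta||^2) for one observation.
            beta = [b - lr * (2.0 * err * z + 2.0 * lam * b) for b, z in zip(beta, Z[i])]
        loss = penalized_loss(Z, y, beta, lam)
        history.append(loss)
        if loss < best_loss - tol:
            best_beta, best_loss, stalled = beta[:], loss, 0
        else:
            # Early stopping: give up after `patience` epochs without improvement.
            stalled += 1
            if stalled >= patience:
                break
    return best_beta, history


# Synthetic, rescaled covariates standing in for (current height, age).
data_rng = random.Random(42)
X = [[data_rng.uniform(-1.0, 1.0), data_rng.uniform(-1.0, 1.0)] for _ in range(50)]
y = [0.6 * h - 0.3 * a + 0.1 * h * a for h, a in X]

Z = transform_covariates(X)
beta, history = fit_sgd(Z, y)
print(f"loss: {history[0]:.4f} -> {min(history):.4f} after {len(history) - 1} epochs")
```

Stochastic coordinate descent would follow the same outline, updating one coefficient of `beta` at a time instead of all of them at each step.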

I’ll write down a more formal description of these algorithms in the future.